Fritz Haber (1868 – 1934) is without a doubt one of the most interesting and controversial scientists to have ever lived. What made him unique is that his work represented the two extremes of scientific innovation – the ability to save lives and the ability to take lives. On one hand he revolutionised agriculture, allowing us to produce enough food to feed the growing global population, but during the First World War he turned his attention to chemical warfare and the extermination of the Allies. The story of his life is perhaps one of the greatest arguments for the necessity of ethics in science.

“A scientist belongs to his country in times of war and to all mankind in times of peace.” – Fritz Haber

World food crisis

At the end of the 19th century Germany had a problem. It was running out of food. This actually wasn’t just a problem for Germany; the whole world was running out of food. In fact, many people were worried that at a global population of 1.5 billion people there just wasn’t enough food to support further population growth. This became the issue for scientists at the time: how could they feed the growing population?

Working out the root cause wasn’t the problem; they knew what they needed. Put simply, they needed more nitrogen.

Nitrogen is essential for plants to grow. I actually talked about the importance of nitrogen for plant growth in an earlier blog post. But to summarise it, without nitrogen there can be no life – seeds need nitrogen to grow, they need it to make the cells that will become the plant.

Now, there are a few places where you can get nitrogen and we used to get almost all of it from, well, poop. In particular, seabird and bat poop, otherwise known as guano. Guano was absolutely vital for 19th century agriculture. It was so important that the ‘Guano Era’ between 1845 and 1866 made Peru, thanks to its vast reserves, a very prosperous country. Spain even went to war with Peru for control of its guano-rich islands. It was basically the oil of its day. The problem was that there just wasn’t enough guano to support the level of agriculture needed to feed everyone.

Bread from the air

There was one nitrogen reserve though that hadn’t been tapped. Nitrogen, as you may know, makes up about 78% of the air we breathe. The trouble is, plants don’t take their nitrogen from the air, they take it from the soil.

Nitrogen in the air isn’t accessible to plants because it doesn’t float around in its simple form. It floats around fiercely bonded to another atom of nitrogen to form one of the strongest bonds in chemistry – a triple covalent bond. It doesn’t matter if you don’t know what that is, the important thing to know is that this type of bond requires large amounts of energy to break apart in order to access the usable nitrogen atoms. This makes it uneconomical for plants when there is much more readily accessible nitrogen in the soil.

This isn’t necessarily an obstacle for humans though and Fritz Haber, working at the time out of the University of Karlsruhe, Germany, started to think about how they could access this nitrogen. How could they break this triple bond?

In 1909 Haber came up with a solution. This solution was to take air and place it in a big tank under extremely high pressure and temperature and then introduce hydrogen. Under such extreme conditions the energy was enough to break the nitrogen triple covalent bond. This allowed hydrogen to elbow its way in and react with nitrogen to produce a new nitrogen-hydrogen bond, producing the chemical we know as ammonia.
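
For readers who like to see the chemistry written down, the reaction described above is usually summarised by the balanced equation below. This is the standard textbook form rather than anything quoted from Haber’s own notes: one molecule of nitrogen combines with three of hydrogen to give two of ammonia, and the double arrow shows that the reaction can run in both directions.

$$
\mathrm{N_2} + 3\,\mathrm{H_2} \rightleftharpoons 2\,\mathrm{NH_3}
$$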

Ammonia, unlike nitrogen in the air, is very accessible to plants and was therefore an excellent fertiliser. Haber had achieved the impossible, he had harnessed nitrogen from the air in what was arguably one of the most significant technological innovations in human history. He had produced ‘bread from the air’.

Today, over 170 million tons of ammonia are produced every year using what we now call the Haber process. It allowed the global population to grow from around 1.5 billion at the end of the 19th century to around 7 billion today, and it is expected to rise further to 10 billion by 2050. It’s estimated that about half of the nitrogen in our bodies was made available to us directly by the Haber process.

It could be argued, then, that Fritz Haber enabled the existence of more life than any other person in history. Fittingly, he was awarded the Nobel Prize in 1918. The thing is, by 1918 he was already considered by many to be a war criminal.

Guns from the air

Haber’s work on ammonia earned him the directorship of a new institute, the Kaiser Wilhelm Institute for Physical Chemistry and Electrochemistry. This didn’t just come with new work commitments, it also came with an entirely new social circle. He was now regularly speaking with cabinet ministers and even the emperor himself. This would be distinction enough for anyone but it was especially so for Haber who was a true patriot. He was a man who sincerely loved his country.

Because of this, when the First World War broke out in 1914 he willingly volunteered for service. He quickly proved his worth by using the Haber process and ammonia for the production of nitric acid, which was used for explosives. Previously, they would have used saltpetre imported from Chile but the Allies had almost full control of Chilean saltpetre as most of it belonged to British industries. But Haber had done it again and produced ‘guns from the air’, which allowed the Germans to fight the war on a level footing despite the apparent disadvantage.

Despite this success, Germany was suffering defeats on the front lines, which caused Haber to think of a new strategy. Drawing on previous experiments using chlorine gas as a weapon, Haber suggested driving the Allied soldiers out of the trenches with gas. Most German army commanders were opposed to the idea and wouldn’t let Haber use it. They called the use of gas ‘unchivalrous’ and said it was ‘repulsive’ to poison men as one would rats. Not to mention, the Hague conventions of 1899 and 1907 prohibited the use of poison or poisoned weapons.

However, eventually Haber found a man willing to use the gas – Albrecht, Duke of Württemberg, who was trying to take the city of Ypres, Belgium.

The battle of Ypres, 1915

Haber arrived in Ypres in February 1915 and had his unit start setting up steel cylinders containing chlorine gas.

Haber’s unit was particularly interesting because rather than soldiers, he recruited physicists, chemists and other scientists who had to be rigorously trained in how to deal with the poison gas. Haber’s ‘gas pioneers’ were all intelligent men whom Haber managed to convince that gas was the only option left available. His famous defense was that ‘it was a way of saving countless lives, if it meant that the war could be brought to an end sooner’.

Incidentally, one of these men was Hans Geiger, inventor of the Geiger counter, and three others (James Franck, Gustav Hertz, and Otto Hahn) went on to win Nobel Prizes in their own fields after the war.

In total, the unit set up 5700 tanks of chlorine gas comprising over 150 tons of chlorine. Haber’s plan was to release the gas into the wind which would carry it over to the Allied trenches. They waited weeks for the right weather conditions but finally on April 22nd the wind was just right…

Some described it as a cloud but others just saw a low yellow wall inching closer as the gas crept along the battlefield. It was said that as it glided slowly through no man’s land, leaves shriveled, the grass turned the colour of metal and birds fell from the air. It took mere minutes to reach the trenches.

Soldiers gagged, choked and convulsed as it hit, causing over 5000 casualties and 1000 deaths. It was the first successful deployment of a weapon of mass destruction.

Two days later, under more favorable conditions, another gas attack was attempted, this time resulting in 10000 casualties and over 4000 deaths. The New York Times reported on 26th April 1915 that ‘[The Germans] made no prisoners. Whenever they saw a soldier whom the fumes had not quite killed they snatched away his rifle … and advised him to lie down to die better.’

Death by chlorine gas was not a pleasant way to go. When breathed in, the chlorine would react with water in the lungs, forming hydrochloric acid that would literally melt the lung tissue. Survivors described it as ‘drowning on dry land’.

The aftermath

In total, over 70000 Allied troops lay dead at Ypres and about half as many Germans. Having seen the success of the gas attack, Haber only wished the Germans had used it sooner.

The Kaiser, having heard of Haber’s success, was delighted with the effect of the first gas attack and Haber was promoted to Captain, a rare rank for a scientist. A party was thrown shortly thereafter to celebrate Haber’s new status.

However, shortly after Haber’s return to Berlin on May 1st, 1915, his wife, Clara Immerwahr, took his service pistol and shot herself in the chest. The body was found by their 13-year-old son.

Although she left no suicide note it was generally believed that she killed herself in protest at her husband’s actions in using poison gas. Previously she had publicly condemned his work as a ‘perversion of the ideals of science’ and ‘a sign of barbarity, corrupting the very discipline which ought to bring new insights into life’.

The following morning Haber left his dead wife and lone son and traveled to the Eastern Front where he was due to initiate another gas attack on the Russians.

The legacy of chemical warfare

The use of chlorine gas by the Germans opened the floodgates to gas usage by both sides in the First World War. The British first used chlorine gas in September 1915, followed by the first use of a new gas, phosgene, later in the year. The first use of mustard gas came in 1917 and, like phosgene, it was actively used by both sides. The overall number of casualties due to poison gas weapons in the First World War has been estimated at around 1.3 million, including roughly 90,000 deaths.

The thing was, by the end of the First World War both sides had invented gas masks and other methods to counteract a poison gas attack, rendering it mostly useless. In the years after the war public opinion turned heavily against poison gas weapons and the Geneva Protocol, signed in 1925, prohibited the use of all poison gas or bacteriological methods of warfare.

In the Second World War, neither Germany nor the Allies used any war gases in combat.

How Fritz Haber should be remembered

The question remains, then, how should Fritz Haber be remembered? Is he the man that saved the world from starvation and enabled billions of lives through fertiliser? Or is he a war criminal who used the first weapon of mass destruction and opened the world up to chemical weapons?

Like almost every question of morality there is no black and white. He fed billions of people so he can’t be totally bad but a good man wouldn’t push poisonous gas into the lungs of other human beings. But does the good outweigh the bad? The world would certainly be a worse place without him. He killed thousands but he still saved billions. Can you call someone a good man based on mathematics?

There is no doubt that Haber was a highly intelligent man capable of brilliance. But to be remembered as a brilliant man you need to know how to use that brilliance and for that you need a conscience. You need to be able to look beyond yourself, to see how your actions affect others. You could say that he was blinded by patriotism or that he believed that he was acting for the greater good but he still chose to kill people to meet these ends. He showed no respect for the lives he was taking.

But, then, who are we to draw the moral line between good and evil? A lot of scientists, a lot of great scientists, have done things that some may call evil. During the Second World War many American physicists worked on the Manhattan Project, which led to the development of the atomic bombs dropped on Hiroshima and Nagasaki that killed more than a hundred thousand people, most of them civilians. Are these scientists evil for not respecting the lives they took? Then again, dropping the atomic bombs ended the war, potentially saving more lives than it destroyed. But isn’t this exactly the justification Haber used to defend his poison gas?

I think that what is more important than labeling scientists as good or evil is using their example to show people how destructive science can be when performed without ethics. Science is not inherently good or evil but it is a tool that can be potentially used for both.

When society remembers a person it sends an important message about the morality of that society and the morals it expects from its people. This is why we should be careful about remembering Haber as good or evil because to do so would cause us to forget the other side. Society should always be aware of the potential destructive power of science when wielded without ethics lest it happen again.

Gold from the sea

For Haber, the rest of his life was mired in tragedy and disappointment.

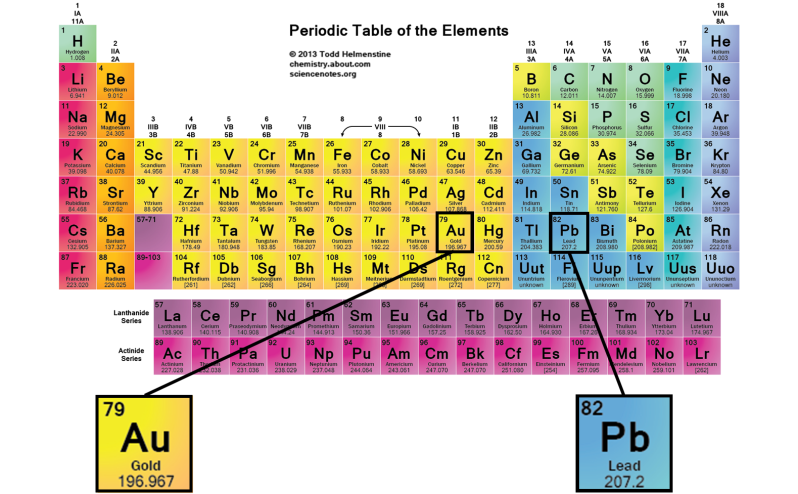

He felt humiliated by Germany’s loss in the war and especially by the huge reparations Germany had to pay. This humiliation made him feel personally responsible for the reparations and so he sought a way to pay them himself. His idea was to attempt to distill gold from the ocean water. Although it sounds ridiculous, ocean water does indeed contain small quantities of gold. The problem was that he severely overestimated the amount of gold there was. He spent five futile years of his life doing this before he concluded that it just wasn’t economically viable.

Then, in 1933, Hitler took control of Germany and one of his first actions was to expel all Jews from the civil service – this included scientists working in Haber’s institute. Haber was, in fact, a Jew but he had converted to Christianity back in 1893. That saved him but didn’t save many of his coworkers and friends. He was unable to save their jobs and resigned in protest himself. Despite his conversion to Christianity he was still seen by the Nazis as ‘Haber the Jew’ and so, fearing for his own safety, he fled to England. The former patriot was said to have felt like he had lost his homeland.

Haber died of heart failure while travelling through Switzerland in 1934 but not before repenting, on his death bed, of waging war with poison gas.

Haber’s tragic irony

One final tragedy befell Haber following his death.

It turned out that his institute had actually created another nitrogen-based gas for use as an industrial pesticide. They called this gas Zyklon. A reformulation of this gas, known as Zyklon B, was used by the Nazis in the gas chambers that killed millions of Jews. Those killed included the children and grandchildren of Haber’s sisters as well as many of his friends who stayed behind.

He would never know.
