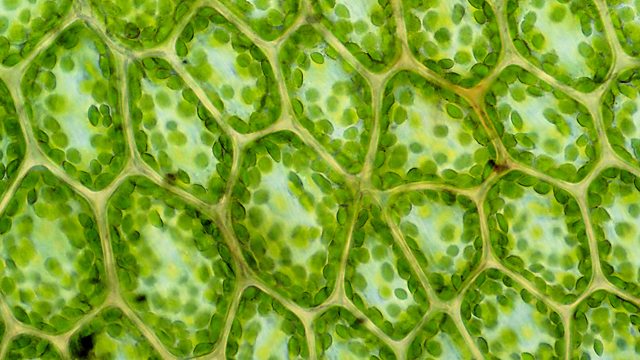

Microscopy is one of the most important techniques in all of science. It has a ton of uses that continue to bring us closer to cures for diseases as well as teaching us more about the world around us. But despite the incredible advances made by modern microscopy, nothing comes close to what was perhaps the most important discovery of all – indisputable proof of cells. It’s something that we take for granted these days, but without microscopy our knowledge of human health would never have moved on from the Middle Ages. The discovery of cells catapulted us into a new era of science when we learned that they make up literally every living thing. This is the story of that journey.

Plant cells seen through a light microscope

“Cells.” BBC bitesize revision. bbc.co.uk/education/guidesz9hyvcw/revision/2. n.p. Web. 25 September 2016

So we’re going to start right at the beginning, the middle of the 17th century. Now, at this time microscopy had been around for about 50 years since its invention in 1590, but scientists regarded it as a largely worthless pursuit. Understandably, perhaps: early microscopes couldn’t magnify all that much, and the very idea of a micro world existing all around us would have been a laughable fantasy at the time.

Robert Hooke (1635 – 1703)

That is, with the exception of Robert Hooke, the brilliant English polymath who didn’t consider microscopy to be worthless at all. He actually liked it very much, so much so that he had a custom microscope built (rather expensively) to pursue his interest, and with it he set out to look at a lot of things.

So many things, in fact, that he published a book of his findings, Micrographia, in 1665. This book turned out to be a pretty big deal because not only was it the first book ever to publish drawings of things such as insects and plants seen through a microscope, it was also the first major publication of the newly formed Royal Society. Now, for those who don’t know how big this is, the Royal Society is extremely influential in the scientific world today. It commands great respect within the scientific community and funds over £42 million of scientific research. The crazy thing is, it may owe a large amount of its success to Micrographia which, to many people’s surprise, became an instant hit and the world’s first scientific best-seller, and subsequently inspired wide public interest in microscopy. Without the start given to it by Micrographia, who knows if the society would even still exist.

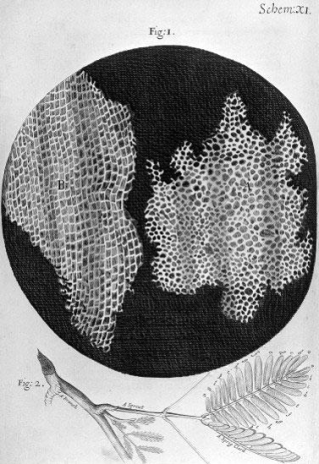

The interesting thing is, despite the wealth of detailed drawings and descriptions contained within the book, it was one particular image that ended up becoming Hooke’s legacy. In the picture you can see on the right, taken directly from Micrographia, he described a magnified cutting from a dead cork tree. He said that the individual compartments reminded him of monks’ cells in a monastery, and for this reason he named them ‘cells’. He didn’t realise that he was looking at the cell walls of plant cells but regardless, because he was the first person to describe them, we’ve used the word cell ever since.

The rest of Hooke’s legacy

With public interest in microscopy riding so high, many scientists were keen to leap on the microscopy bandwagon but, strangely, one of these scientists was not Robert Hooke.

Robert Hooke was nothing short of a genius and an industrious polymath who put microscopy on the back-burner and went on to contribute to countless other areas of science. To name a few: he postulated theories of gravity that preceded Isaac Newton’s; he stated that fossils were the remains of living things and went on to suggest that they be studied to learn more about Earth’s history; he used telescopes to study craters on the moon and the rings around Saturn; and he came up with theories of memory capacity, memory retrieval and forgetting. Not to mention that he was an accomplished architect who acted as a surveyor for the City of London and helped rebuild it after the Great Fire of London of 1666, going so far as to co-design multiple buildings that still exist today.

Remarkably, the scientist most often credited with pushing microscopy forward was actually a Dutch cloth merchant called Antonie van Leeuwenhoek.

Antonie van Leeuwenhoek (1632 – 1723)

A cloth merchant is a strange start for a scientist but van Leeuwenhoek had already been using magnifying glasses to examine his threads and cloth for a while. The thing that made van Leeuwenhoek truly unique though was his ability to create high quality lenses. With public opinion so strongly in favour of microscopy, he set out to bend his considerable talent towards it.

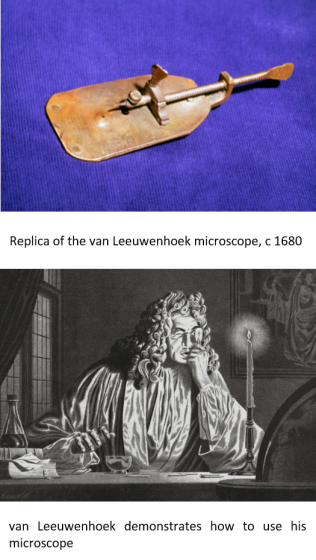

Armed with his lenses, van Leeuwenhoek actually managed to create a microscope that didn’t see an equal for over one hundred years. The downside was that his microscope was, admittedly, a very strange design.

The image on the right shows what it looked like and how you should hold it.

The lens sits in the tiny hole you can see in the middle of the large plate. The sample holder is the long screw that ends above the tiny hole in a fine point, on which he would place the sample. The two screws allowed him to change the position of the sample slightly.

The issue, as you can see from the lower image on the right, is that the microscope had to be held as close to the eye as possible. This was because his small lenses needed the sample to be very close for them to be in focus. His strongest lenses, for example, needed the sample to be about one millimetre away…

He may have looked silly doing it but the things he discovered were anything but. He became the first person to see red blood cells in 1674 and then human sperm cells in 1677. He followed that up with countless observations of microorganisms found in pond water. With these observations he became the first person to describe bacteria. Of course, he didn’t know what bacteria were but, based on what they looked like and the way they moved, van Leeuwenhoek recognised that they were living things. For this reason he called them ‘animalcules’, which means something like ‘small animals’.

van Leeuwenhoek’s animalcules

Now, what we have to remember about this time is that no one knew a single thing about cells. Although van Leeuwenhoek had seen red blood cells and bacteria, no one knew what they were or what they did. It wasn’t known that red blood cells carry oxygen around the body (in fact, people weren’t even aware of the existence of oxygen) and as far as disease was concerned, the miasma theory was still the dominant idea.

The miasma theory is really interesting actually. I go into a bit more detail about it in my post about the Black Death but, essentially, it was believed that disease came from ‘bad air’. It was thought that areas of bad air contained poisonous materials that caused disease and that they could be located by their terrible smell. People didn’t believe that humans (or animals) could pass disease to each other; rather, people in the same area got infected because they were all in the same area of bad air. This theory is really well exemplified by the disease malaria, which is spread by mosquitoes that typically live around smelly swampland. Malaria in medieval Italian is a literal description of the idea – mala aria = bad air.

For this reason, instead of demonising bacteria as the disease-causing agents that some are, van Leeuwenhoek, a devout Calvinist, wrote about how great God was to provide the Earth with so many examples of life both great and small.

van Leeuwenhoek the businessman

Getting back to the microscopes, it was well known that van Leeuwenhoek’s microscope design was strange, so strange, in fact, that no other scientist of the time used it. But that’s just one reason. The other, more important, reason was that his microscopes were simply the best in the world and not one other scientist was able to reconstruct them. They had the highest magnification and best resolution, and no other microscopes existed that could come close. They were so good that we weren’t able to recreate a van Leeuwenhoek microscope until the 1950s…

This meant that van Leeuwenhoek had a virtual monopoly on all microscopic research. No other scientist could compete. Ever the businessman, van Leeuwenhoek was completely aware of this, which is why he never revealed the microscopes he used for his research. He worked alone, and when visited by notable figures and other scientists he only showed them lesser lenses so that he would always stay at the top of his field.

(Incidentally, if you think that his behaviour was selfish because sharing this information could have advanced science faster… Don’t go into research.)

Robert Hooke himself even lamented that the whole field of microscopy was resting on just one man’s shoulders.

van Leeuwenhoek the scientist

Despite this practice, van Leeuwenhoek contributed massively to the fields of microscopy and microbiology. Although he never trained as a scientist, he was a highly skilled amateur whose research was of a very high quality. His talent for making lenses was certainly not wasted: he made over 500 lenses and over 200 microscopes, each one individually hand-made to provide a different level of magnification. By the end of his life he’d sent over 560 letters to the Royal Society and other institutions detailing his discoveries. For this reason he’s considered to be the father of microbiology and the world’s first microbiologist.

Unable to compete, other scientists focused instead on creating new microscopes that would provide better magnification and resolution than van Leeuwenhoek’s designs. They succeeded, eventually. But it was close to one hundred years before these new microscopes were able to even match van Leeuwenhoek’s.

Improvements in microscopy

And so, in 1832, over one hundred years after van Leeuwenhoek’s death, microscopy yet again came into the spotlight. This time our unlikely hero was an Englishman, Joseph Jackson Lister, who owned a wine business but was deeply interested in natural history.

He wanted to use a microscope to investigate plants and animals but noticed that the microscopes of the time had pretty poor resolution which made it impossible to see the very small things he wanted to see. So he sought to change that.

The fine details of what he did are complex and a bit out of the scope of this blog but basically, the issue microscopes had at the time was that when light passed through the lenses, not every colour of the spectrum came into focus at the same point. This was a problem because the unfocused colours caused distortions and coloured halos on the image. What he did was to invent a new type of lens, an achromatic lens, that brought the colours of light to a common focus, which drastically improved the resolution. In short, what he did was to perfect the optical microscope. What’s more, he had a wine business to run so he did this all in his spare time.

Unfortunately, that’s all we have to say about Joseph Jackson Lister. Although I’d like to take a quick aside to say that if his name sounds familiar it’s because his son, Joseph Lister, was the famous surgeon, pioneer of antiseptic surgery and widely regarded as the father of modern surgery.

But for our story, Joseph Jackson Lister merely provided the microscope; we’re interested in a couple of men who used it: Matthias Schleiden and Theodor Schwann.

Matthias Schleiden (1804 – 1881)

Schleiden was yet another interesting case. He was a German who originally trained and practised as a lawyer but abandoned law to pursue his hobby – botany. He studied botany at university and then moved on to teach it as a professor of botany at the University of Jena.

Schleiden disagreed with the botanists of the time, who, in his opinion, were devoted only to naming and describing plants. What Schleiden wanted to do was to really study them, and he wanted to study them microscopically.

It was through this study, using Joseph Jackson Lister’s microscope design, that he realised, just like Hooke, that plants were all made up of recognisable blocks, or cells. With this discovery he wrote his famous article, ‘Contributions to Phytogenesis’, in 1838, in which he proposed that cells were the most basic unit of life in plants and that plant growth was caused by the production of new cells.

What he was telling us was that, in plants, cells are the building blocks of life and the reason for growth. Plants are made of cells and they grow when new cells are made.

He was so close to cell theory. The problem was that he only made these conclusions about plants. But this all changed after one fateful night of dining with his colleague, Theodor Schwann.

Theodor Schwann (1810 – 1882)

Schwann worked in the same university as Schleiden but, by contrast, studied animals, and over dinner they began talking about their work. Schwann immediately noticed the similarities between Schleiden’s description of plant cells and things he’d seen in animals, and the pair wasted no time connecting the two phenomena.

What happened next was the publication of perhaps one of the most important books in the history of biology: Schwann and Schleiden’s Microscopical Researches into the Accordance in the Structure and Growth of Animals and Plants (1847).

In this book they concluded that the cell is the basic unit of all life and set out the two basic tenets of cell theory:

1. All living organisms are composed of one or more cells.

2. The cell is the basic unit of structure and organization in organisms.

This was a huge deal. I can’t even begin to explain what this meant for our understanding of life. I’d go so far as to say that everything we know about life today came from this one idea.

Reconciling science with religion

If there was one issue with Schwann and Schleiden’s book, it was that they missed the last crucial component of cell theory; the question of how new cells formed was very much still contested.

The leading thought at the time was that of spontaneous generation. This theory, heavily influenced by religion, was that life could spontaneously arise from nothing, or at the very least from some kind of inanimate matter. This explained how maggots seemed to form on dead flesh or how fleas formed from dust.

In what many now believe to be a concession to religion, Schwann and Schleiden wrote that new cells formed from inanimate matter within existing cells. This allowed them to maintain that cells were the building blocks of life while at the same time attributing the formation of new cells to God.

Many doubt that Schwann believed this, but Schleiden was known to favour the spontaneous generation theory, and including it in the book helped the book gain favour with the public, which led to its great success.

The last tenet

It wasn’t until 1855, when Rudolf Virchow, persuaded by the work of Robert Remak, published his article Omnis cellula e cellula (All cells [come] from cells), that we ended up with our final tenet of cell theory:

3. Cells arise from pre-existing cells.

This stated that the only source for a living cell was another living cell and thus completed what we now consider to be cell theory.

Since then, new tenets have been added, but these three have remained unchanged as the basis for cell theory for more than 150 years.

So the next time someone tells you that microscopy is boring, tell them that without it we would know nothing about life on this planet.
